Torts & Section 230

— and How They Apply to AI

Peter Henderson, J.D., Ph.D.

Assistant Professor of Computer Science & Public Affairs

Content Warning

Torts inherently deal with real harm, so we may briefly touch on difficult topics. Please feel free to step out at any time.

If you ever need support, there are resources available:

📞 609-258-3141

🌐 https://uhs.princeton.edu/counseling-psychological-services

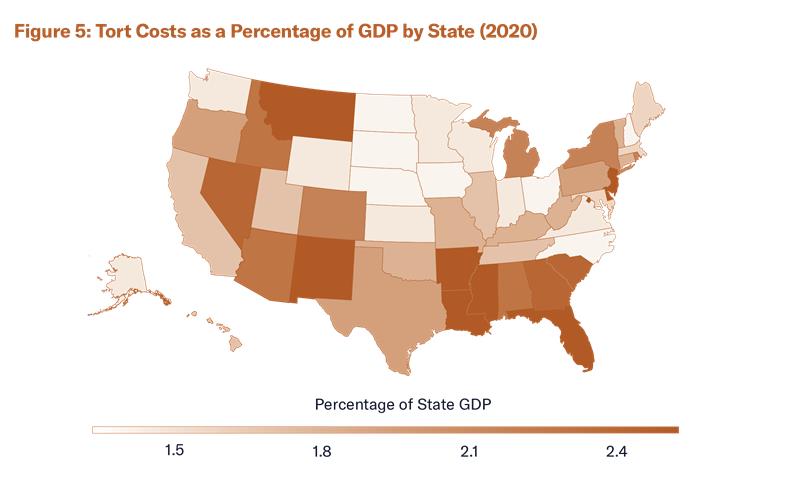

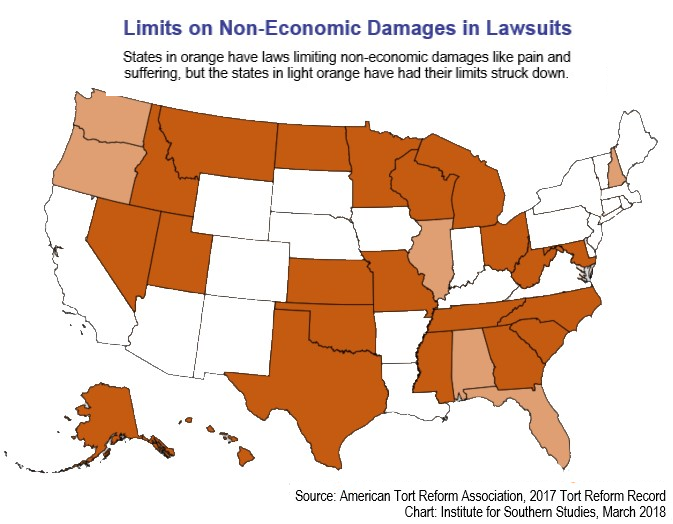

Torts are largely state law, so the rules vary considerably from state to state.

What are torts?

Name some torts!

What are torts?

Civil liability arising from a civil wrong that causes harm or injury to another person

Intentional Torts

- Battery

- Assault

- False Imprisonment

- Intentional Infliction of Emotional Distress

- Some privacy claims

Unintentional Torts

- Negligence

- Strict Liability

  - Ownership of animals

  - Abnormally Dangerous Activities

  - Products Liability

Tort Terminology

What is a "tortfeasor"?

Tortfeasor:

Person who commits the tort

What does "liable" mean?

Liable:

Legally responsible for the harm (the civil counterpart of being "guilty")

What are "damages"?

Damages:

Money the tortfeasor has to pay as a remedy for the harm inflicted

What are "compensatory damages"?

Compensatory Damages:

Restore the plaintiff (insofar as possible) to their position before the tort, usually through monetary compensation

What are "punitive damages"?

Punitive Damages:

Additional damages meant to "punish" and deter culpable or egregious conduct (awarded in about 5% of cases)

What is an "injunction"?

Injunction:

Court orders tortfeasor to cease conduct

What is the "Restatement of Torts"?

Restatement of Torts:

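Treatises from the American Law Institute in which judges, lawyers, and legal scholars distill a unified statement of the law from 50-state surveys; persuasive, but not binding on courts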

Are torts criminal or civil?

Civil

Negligence as a Tort

The four elements required to prove negligence:

Duty of Reasonable Care

The defendant owed a duty to the plaintiff

Breach

The defendant failed to meet the standard of care

Causation

The breach caused the plaintiff's injury

Harm and Resulting Damages

The plaintiff suffered actual harm or injury
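Because the four elements are conjunctive, failing to prove any one of them defeats the claim. A toy Python model makes that all-or-nothing structure explicit; it assumes each element has already been resolved to a plain yes or no (real litigation, with contested facts and burdens of proof, is never this clean), and the class name is hypothetical:

```python
from dataclasses import dataclass, fields


@dataclass
class NegligenceClaim:
    """Hypothetical model: each field records whether the plaintiff proved that element."""
    duty: bool       # defendant owed the plaintiff a duty of reasonable care
    breach: bool     # defendant failed to meet the standard of care
    causation: bool  # the breach caused the plaintiff's injury
    damages: bool    # plaintiff suffered actual harm or injury

    def missing_elements(self) -> list[str]:
        """Names of the elements the plaintiff has not established."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def prima_facie_case(self) -> bool:
        """Negligence requires all four elements; any missing one defeats the claim."""
        return not self.missing_elements()


claim = NegligenceClaim(duty=True, breach=True, causation=False, damages=True)
print(claim.prima_facie_case())  # False
print(claim.missing_elements())  # ['causation']
```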

Negligence as a Tort

Duty of Reasonable Care

The defendant owed a duty to the plaintiff

"Primary factors to consider in ascertaining whether a person's conduct lacks reasonable care are the foreseeable likelihood that the conduct will result in harm, the foreseeable severity of any harm that may ensue, and the burden of precautions to eliminate or reduce the risk of harm." — Restatement (Third) of Torts

"A defendant owes a duty of care to all persons who are foreseeably endangered by his conduct, with respect to all risks which make the conduct unreasonably dangerous." — Rodriguez v. Bethlehem Steel Corp., 12 Cal. 3d 382, 399 (Cal. 1974)

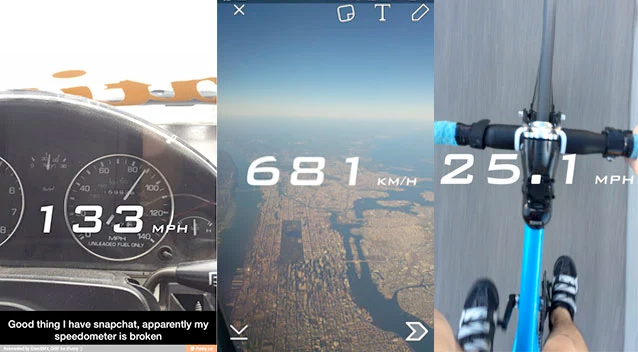

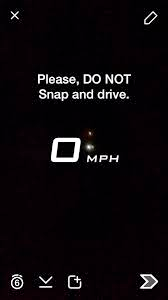

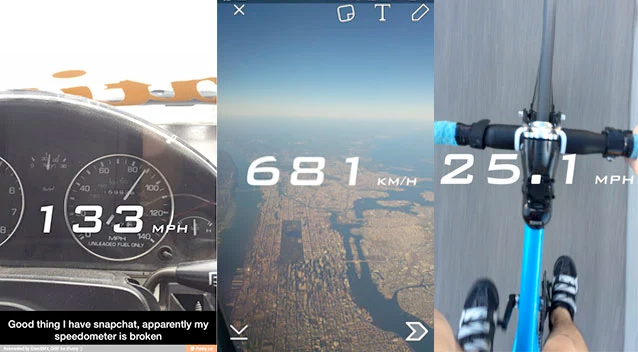

Case Study: Maynard v. Snapchat

Facts:

- Snapchat's "Speed Filter" overlaid the user's current speed on photos or video taken with the phone camera

- A viral challenge had users trying to "win" by recording the highest speed

- A teenage driver using the filter at over 100 mph crashed into another car, leaving its driver, Wentworth Maynard, with permanent brain damage

- Plaintiffs claimed Snapchat was negligent in designing the filter

Case Study: Maynard v. Snapchat

Duty of Reasonable Care? Foreseeable?

Discuss for 3 minutes...

Case Outcome: Maynard v. Snapchat

Outcome:

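- Duty: Georgia Supreme Court rejected a categorical "no duty if the user misbehaves" rule; a design duty can extend to reasonably foreseeable misuse

- Breach: Plaintiffs alleged the design encouraged speeding and the warnings were inadequate; the court reversed and remanded rather than deciding breach itself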

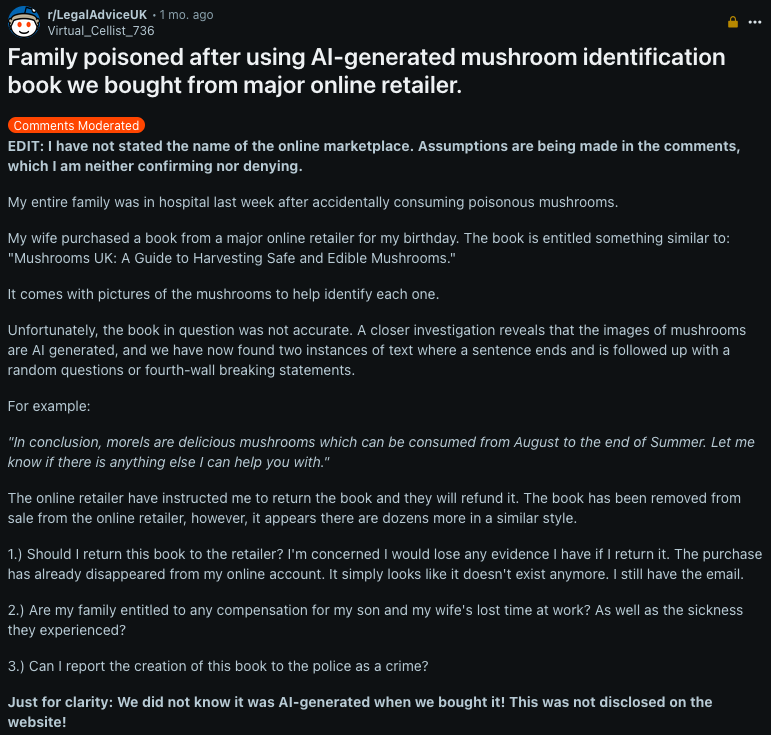

Case Study: Winter v. G.P. Putnam's Sons

Facts:

- Plaintiffs purchased "The Encyclopedia of Mushrooms" published by G.P. Putnam's Sons

- Relying on book descriptions, they consumed wild mushrooms identified as safe

- Both became critically ill and required liver transplants

- Plaintiffs sued publisher for misrepresentation alleging erroneous information

Case Study: Winter v. G.P. Putnam's Sons

Publisher Liability? Duty of Care?

Panel?

Case Outcome: Winter v. G.P. Putnam's Sons

Outcome:

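- Duty: Ninth Circuit held the publisher had no duty to investigate or guarantee the accuracy of the book's contents

- Liability: Publisher not liable for the author's inaccurate information; the ideas and expression in a book are not a "product" for strict products liability

- Policy: Imposing liability would make publishers implicit guarantors of every book they publish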

Comparative Analysis

What differentiates Snapchat from the publisher in Winter v. G.P. Putnam's Sons?

Hypothetical

Negligence as a Tort

Breach

The defendant failed to meet the standard of care

The Hand Formula

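The Formula: negligent if B < P × L

(B = burden of adequate precautions, P = probability of harm, L = magnitude of the loss; United States v. Carroll Towing Co., 159 F.2d 169 (2d Cir. 1947))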

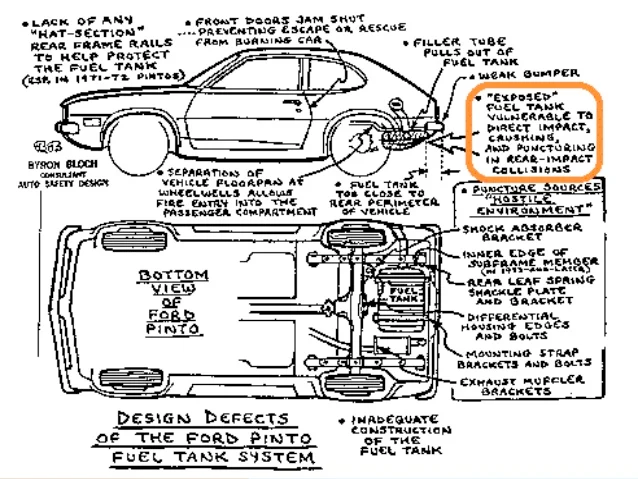

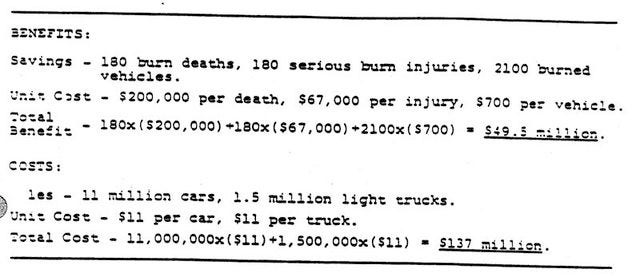
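The comparison itself is simple arithmetic. A minimal Python sketch, where B is the burden of the untaken precaution, P the probability of harm, and L the magnitude of the loss; the function name and the dollar figures in the example are hypothetical:

```python
def hand_negligent(burden: float, probability: float, loss: float) -> bool:
    """Hand formula: omitting a precaution is negligent when its burden B
    is less than the expected loss P * L that the precaution would prevent."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return burden < probability * loss


# Hypothetical precaution against a 1% chance of a $50,000 loss (expected loss $500).
print(hand_negligent(100, 0.01, 50_000))    # True: $100 < $500, so skipping it is negligent
print(hand_negligent(1_000, 0.01, 50_000))  # False: $1,000 > $500
```

Real cases rarely come with precise values for P and L, so courts usually treat the formula as a way to structure the reasonableness inquiry rather than as a literal calculation.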

Case Study: Ford Pinto

If the Hand Formula is the right test, should Ford have won the Pinto case given that it did these calculations?

Discuss for 3 minutes...

Case Outcome: Ford Pinto

Outcome:

- Ford's cost-benefit analysis: $11 per vehicle vs. $200,000 per death

- Jury awarded $127.8M total damages ($125M punitive, $2.8M compensatory)

- Judge reduced punitive damages to $3.5M
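Using only the two figures above, the Hand formula yields a break-even risk: the $11 fix is required once P × $200,000 exceeds $11, i.e. once the per-vehicle probability of a fire death exceeds 11 / 200,000. A short Python check (exact fractions avoid floating-point noise); it assumes deaths are the only loss, ignoring burn injuries and property damage, because the slide gives no other figures:

```python
from fractions import Fraction

# Figures from the slide.
COST_PER_VEHICLE = 11      # B: Ford's cost of the fix, dollars per vehicle
VALUE_PER_DEATH = 200_000  # L: Ford's valuation of one death, dollars

# Hand formula B < P * L  =>  the fix is required once P exceeds B / L.
break_even_p = Fraction(COST_PER_VEHICLE, VALUE_PER_DEATH)

print(float(break_even_p))      # 5.5e-05
print(round(1 / break_even_p))  # 18182, i.e. about one fire death per 18,182 vehicles
```

Counting only deaths makes the threshold higher than it would be if all losses were included, which tilts the calculation toward not making the fix.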

Law & Economics: AI Safety

Applying Hand Formula to AI:

- B = Burden of AI safety measures (red-teaming, alignment, monitoring)

- P = Probability of AI misuse or harmful outputs

- L = Magnitude of potential harm (misinformation, bias, security risks)
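For a specific safety measure, the practical question is marginal: does it reduce expected harm by more than it costs? This marginal form of the Hand formula (compare B with the reduction in P × L the precaution buys, summed across harm types) is a standard law-and-economics refinement, swapped in here for illustration. The harm categories, probabilities, and dollar amounts below are invented placeholders, not estimates of real AI risk:

```python
from dataclasses import dataclass


@dataclass
class HarmRisk:
    name: str
    p_without: float  # probability of the harm without the safety measure
    p_with: float     # probability of the harm with the safety measure
    loss: float       # magnitude of the harm if it occurs, in dollars


def expected_harm_averted(risks: list[HarmRisk]) -> float:
    """Reduction in expected loss: sum of (P_without - P_with) * L over harm types."""
    return sum((r.p_without - r.p_with) * r.loss for r in risks)


def precaution_warranted(burden: float, risks: list[HarmRisk]) -> bool:
    """Marginal Hand test: the measure is warranted if B < expected harm averted."""
    return burden < expected_harm_averted(risks)


# Hypothetical red-teaming program and the risks it is assumed to reduce.
risks = [
    HarmRisk("misinformation", p_without=0.10, p_with=0.05, loss=2_000_000),
    HarmRisk("security exploit", p_without=0.02, p_with=0.01, loss=10_000_000),
]
print(round(expected_harm_averted(risks)))   # 200000
print(precaution_warranted(150_000, risks))  # True
print(precaution_warranted(250_000, risks))  # False
```

The hard part is that credible values of P and L for AI harms are rarely available, so modest changes to the assumed inputs can flip the result.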

AI Safety Discussion

What constitutes a reasonable precaution given the magnitude of the risks? How much safety spending do you think is currently reasonable?

Discuss for 3 minutes...

Negligence as a Tort

Causation

The defendant's breach caused the plaintiff's harm

Negligence: Proximate Causation

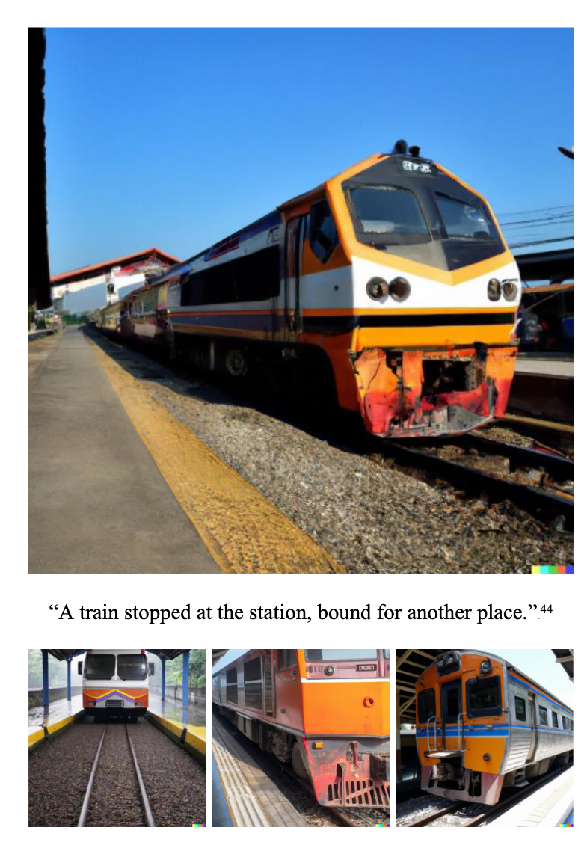

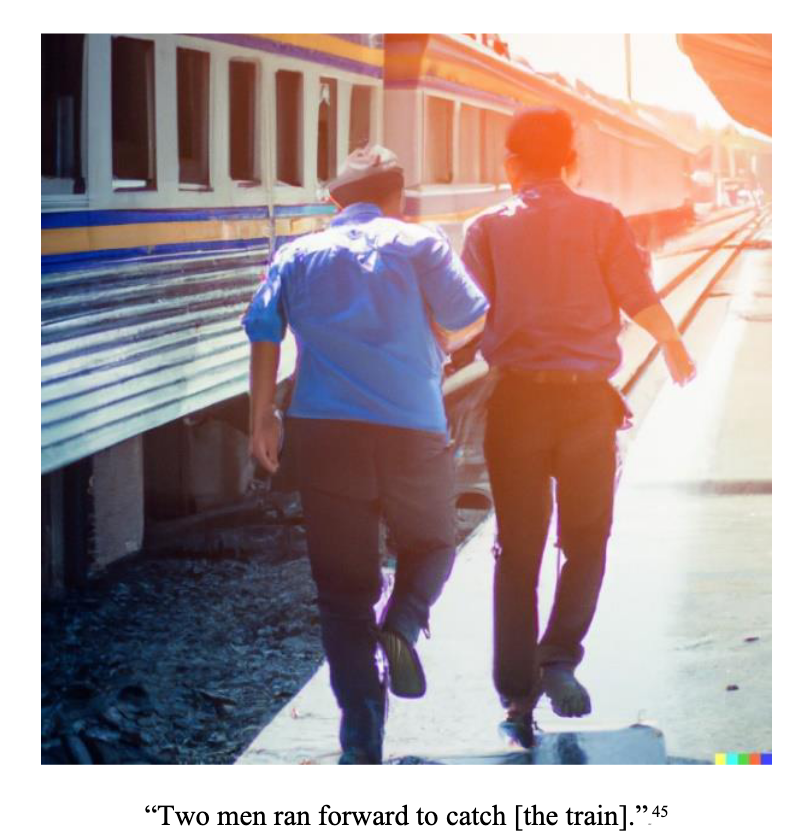

Palsgraf

Palsgraf Video

The Palsgraf Verdict

Court's Holding:

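It was not foreseeable that the package contained fireworks, or that helping a passenger board would endanger Mrs. Palsgraf, standing far down the platform

Therefore, the railroad (the guards' employer) is not liable to her

Legal Principle:

Incorporates foreseeability into the negligence analysis (Cardozo's majority framed it as duty; Andrews' dissent framed it as proximate cause)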

Intervening Cause: Malicious Actors

Hypothetical: Open-source Models

A company releases an open-source AI model that gets fine-tuned by malicious actors to generate harmful content.

Should the original developers be liable for the downstream misuse?

Panel discussion - 5 minutes...

Product Liability for AI Systems

How core product-liability rules map onto AI models, apps, and platforms—plus what recent cases mean for builders and deployers.

Product Liability: The Basics

Types of product liability defects:

Manufacturing Defect

Product departs from intended design

Design Defect

Risk–utility with reasonable alternative design (RAD) in many jurisdictions

Failure to Warn/Instruct

Inadequate instructions or warnings render product unsafe

Strict Liability (state-by-state)

For commercial sellers in the distribution chain; negligence can apply in parallel

Baseline Question: Is Software/AI a "Product"?

Panel?

Case Study: Estate of B.H. v. Netflix, Inc. & James v. Meow Media

James v. Meow Media:

"intangible thoughts, ideas, and messages contained within games, movies, and website materials are not products for purposes of strict products liability"

(Video games, films, and websites allegedly caused a 1997 school shooting in Paducah, Kentucky)

Estate of B.H. v. Netflix, Inc.:

The court reasoned that "[w]ithout the content, there would be no claim," and granted the defendant's motion to dismiss on the ground that the plaintiff's harm was caused by Netflix's expressive content

(Netflix's "13 Reasons Why" allegedly caused self-harm)

Outcomes Split: Are Apps/AI “Products”?

NOT a Product (content / service)

Product / Feature-as-Product (design-focused)

Framework: In re Social Media (702 F. Supp. 3d 809)

“All-or-Nothing” — App as a Whole (less likely)

- Court rejected broad “the app is the product” theory, steering analysis away from content and toward design.

“Defect-Specific” — Feature-Level (more likely)

- Is it tangible or sufficiently analogous to tangible property?

- Is it a service (if so, not a product)?

- Is it akin to ideas/content/expression (if so, not a product)?
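Read as a checklist, the defect-specific approach asks these three questions of each challenged feature rather than of the app as a whole. A simplified Python sketch; the booleans stand in for judgments a court would actually make, and the example features and their classifications are illustrative assumptions, not holdings from the case:

```python
from dataclasses import dataclass


@dataclass
class Feature:
    """One challenged feature, described by the framework's three questions."""
    name: str
    tangible_or_analogous: bool  # tangible, or sufficiently analogous to tangible property?
    is_service: bool             # a service rather than a product?
    is_expression: bool          # akin to ideas, content, or expression?


def treated_as_product(feature: Feature) -> bool:
    """A feature is a candidate 'product' only if it is tangible-like and is
    neither a service nor ideas/content/expression."""
    return (feature.tangible_or_analogous
            and not feature.is_service
            and not feature.is_expression)


# Illustrative classifications only (hypothetical features).
age_gate = Feature("age-verification control", tangible_or_analogous=True,
                   is_service=False, is_expression=False)
video_content = Feature("content of a recommended video", tangible_or_analogous=False,
                        is_service=False, is_expression=True)

for f in (age_gate, video_content):
    print(f.name, "->", treated_as_product(f))
```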

Background: Design & Warnings Tests

Design Defect

- Risk–utility test requiring a reasonable alternative design (RAD), per Restatement (Third) of Torts: Products Liability §2(b), followed in many courts.

- Consumer-expectations test used in some jurisdictions/contexts.

Warnings/Instructions

- §2(c): inadequate warnings can render a product defective.

- Open-and-obvious dangers may limit duty to warn/design in some jurisdictions.

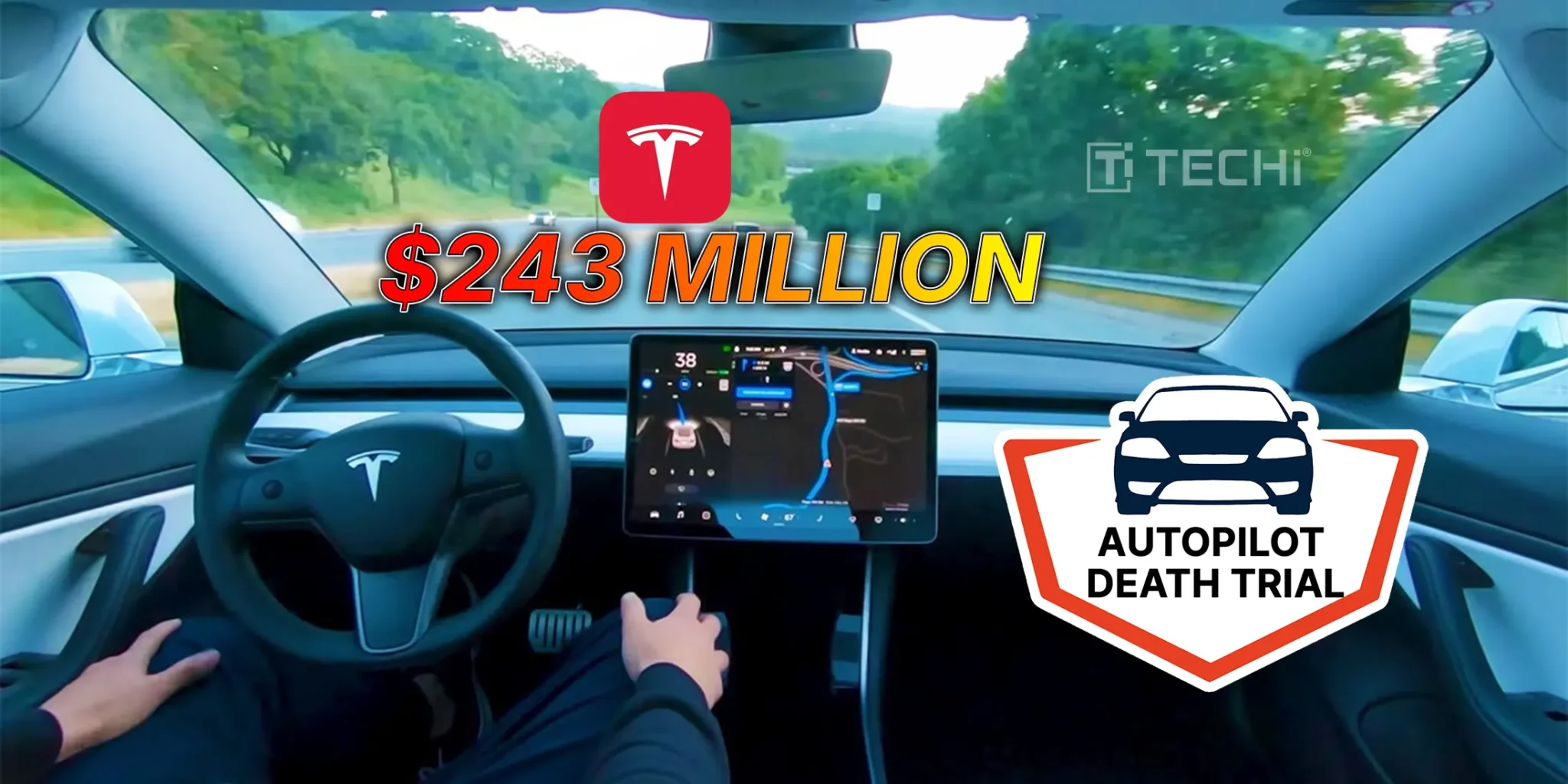

Case Study: Benavides & Angulo v. Tesla, Inc.

- 2019 Key Largo crash: Model S with Autopilot active hit a parked Chevy Tahoe; impact killed Naibel Benavides León and seriously injured Dillon Angulo.

- Plaintiffs alleged Autopilot was defectively designed and marketed: it could be used off-highway without adequate restrictions, and it failed to disengage or warn the driver.

- After a ~3-week trial, a Miami federal jury found Tesla partially liable (Autopilot a legal cause of harm) and awarded punitive damages.

- Damages: $129M compensatory (Tesla responsible for 33%) + $200M punitive = ~$243M against Tesla; company has noticed appeal.
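The ~$243M figure is Tesla's 33% share of the compensatory award plus the full punitive award. A quick arithmetic check in Python using only the numbers above (integers avoid rounding noise); it reflects the verdict as summarized, not any later post-trial changes:

```python
# Figures from the slide, in whole dollars.
compensatory_total = 129_000_000
tesla_share_percent = 33
punitive = 200_000_000

# Tesla pays its 33% share of the compensatory award plus the punitive award.
tesla_compensatory = compensatory_total * tesla_share_percent // 100
tesla_total = tesla_compensatory + punitive

print(f"${tesla_compensatory:,}")  # $42,570,000
print(f"${tesla_total:,}")         # $242,570,000
```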

Case Study: Garcia v. Character.AI & Raine v. OpenAI

- Garcia v. Character Techs., Inc. (M.D. Fla.): A mother alleged that her teenage son became addicted to Character.AI and that this contributed to his death; the court largely denied the motions to dismiss, allowing product-liability and failure-to-warn theories to proceed (while dismissing IIED) and letting a "component-part manufacturer" theory against Google move forward at the pleadings stage.

- Raine v. OpenAI, Inc. (San Francisco Super. Ct.): Parents allege that ChatGPT (GPT-4o) validated and coached their 16-year-old son's self-harm; claims include strict liability, negligence, and wrongful death; newly filed, with no merits ruling yet.

Discussion: Product Classification

What about Character.AI/ChatGPT?

What distinguishes them?

Panel discussion - 5 minutes...

Elements of Defamation

The four elements required to prove defamation:

False Statement

A false statement purporting to be fact

Publication

Publication or communication to a third person

Fault

Fault amounting to at least negligence (or actual malice for public figures)

Damages

Damages or harm to reputation

Why would it be different for public figures?
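The fault element is the one that moves with the plaintiff's status: the same false, published, harmful statement can succeed for a private plaintiff and fail for a public figure. A simplified Python sketch, assuming each element has already been decided and ignoring defenses such as opinion and privilege; the names and the example are hypothetical:

```python
from dataclasses import dataclass
from enum import IntEnum


class Fault(IntEnum):
    NONE = 0
    NEGLIGENCE = 1
    ACTUAL_MALICE = 2  # knowledge of falsity or reckless disregard for the truth


@dataclass
class DefamationClaim:
    false_statement_of_fact: bool
    published_to_third_party: bool
    proven_fault: Fault
    reputational_harm: bool
    public_figure: bool


def required_fault(claim: DefamationClaim) -> Fault:
    """Public figures must show actual malice; private plaintiffs, at least negligence."""
    return Fault.ACTUAL_MALICE if claim.public_figure else Fault.NEGLIGENCE


def defamation_established(claim: DefamationClaim) -> bool:
    return (claim.false_statement_of_fact
            and claim.published_to_third_party
            and claim.proven_fault >= required_fault(claim)
            and claim.reputational_harm)


# Same hypothetical chatbot error, proven negligent but not malicious.
private = DefamationClaim(True, True, Fault.NEGLIGENCE, True, public_figure=False)
public = DefamationClaim(True, True, Fault.NEGLIGENCE, True, public_figure=True)
print(defamation_established(private))  # True
print(defamation_established(public))   # False
```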

Defamation Case Studies

Battle v. Microsoft Corporation

- Jeffery Battle (entrepreneur and professor) confused with Jeffrey Battle (convicted criminal)

- AI-generated results attributed the other man's criminal record to Jeffery Battle

- Battle is President and CEO of Battle Enterprises, LLC and "The Aerospace Professor"

- Honorably discharged U.S. Air Force veteran and Adjunct Professor at Embry-Riddle Aeronautical University

Starbuck v. Meta Platforms, Inc.

- Conservative activist Robby Starbuck filed defamation lawsuit against Meta in April 2025

- Meta's AI chatbot accused Starbuck of participating in January 6th Capitol riot

- AI also labeled Starbuck as a white nationalist

- Settled in August 2025: Meta agreed to work on AI accuracy and political neutrality, with Starbuck serving as an advisor

Other Torts

| Scenario (Examples) | Liability Regime (Examples) | Related Existing Cases |
|---|---|---|
| Providing false defamatory information about someone | Defamation | Walters v. OpenAI L.L.C., Battle v. Microsoft Corporation |
| Recruiting an individual to conduct an act of terror | Justice Against Sponsors of Terrorism Act | Gonzalez v. Google, Twitter, Inc. v. Taamneh |
| Convincing someone to overstay their visa with false information | Federal Human Smuggling Laws (8 U.S.C. § 1324, encouraging or inducing unlawful residence) | United States v. Hansen |
| Providing detailed information on how to harm someone | Wrongful Death, Personal Injury, Aiding and Abetting Murder | Rice v. Paladin Enterprises, Inc., Commonwealth v. Carter |
| Providing information and instructions on how to evade taxes | 26 U.S.C. § 7206(2) (Counseling violations of tax laws) | United States v. Freeman |
| Telling someone a product or activity is safe when it isn't | Negligent or intentional misrepresentation leading to injury | Winter v. G.P. Putnam's Sons, Randi W. v. Muroc Joint Unified Sch. Dist. |

You Can't Sue an AI

1. AI Lacks Legal Agency

AI does not have "intent" or "agency" in the way that an employee does. You can't push responsibility onto the AI itself.

2. The Human Status Question

If you allow AI to have the status of a human (agency, ethics, loyalty, responsibility, reliability), what could be the effect? What are the pros and cons?

Challenges: Mens Rea

1. Knowing

- JASTA, Money Laundering, etc.

- But it is hard to prove that the creator knew the AI system would generate the particular harm.

- Knowledge laundering: a developer could deliberately seed its web scrape with harmful data, make that data more prevalent in training, and then call the resulting harm "accidental."

2. Recklessness

- One could argue that training on harmful data is reckless, but because technical tools for harm mitigation are still in flux, it is unclear whether that argument would succeed.

From Liability to Immunity

Section 230

Communications Decency Act of 1996

Protection for "Good Samaritan" blocking and screening of offensive material

Section 230 Legal Text

(c) PROTECTION FOR "GOOD SAMARITAN" BLOCKING AND SCREENING OF OFFENSIVE MATERIAL

(1) TREATMENT OF PUBLISHER OR SPEAKER

No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

(2) CIVIL LIABILITY

No provider or user of an interactive computer service shall be held liable on account of—

- (A) any action voluntarily taken in good faith to restrict access to or availability of material that the provider or user considers to be obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable, whether or not such material is constitutionally protected; or

- (B) any action taken to enable or make available to information content providers or others the technical means to restrict access to material described in paragraph (1).

Section 230 Effect on Other Laws

(e) EFFECT ON OTHER LAWS

(1) NO EFFECT ON CRIMINAL LAW

Nothing in this section shall be construed to impair the enforcement of section 223 or 231 of this title, chapter 71 (relating to obscenity) or 110 (relating to sexual exploitation of children) of title 18, or any other Federal criminal statute.

(2) NO EFFECT ON INTELLECTUAL PROPERTY LAW

Nothing in this section shall be construed to limit or expand any law pertaining to intellectual property.

(3) STATE LAW

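Nothing in this section shall be construed to prevent any State from enforcing any State law that is consistent with this section. No cause of action may be brought and no liability may be imposed under any State or local law that is inconsistent with this section.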

(4) NO EFFECT ON COMMUNICATIONS PRIVACY LAW

Nothing in this section shall be construed to limit the application of the Electronic Communications Privacy Act of 1986 or any of the amendments made by such Act, or any similar State law.

Section 230 Definitions

(f) Definitions

(1) INTERNET

The term "Internet" means the international computer network of both Federal and non-Federal interoperable packet switched data networks.

(2) INTERACTIVE COMPUTER SERVICE

The term "interactive computer service" means any information service, system, or access software provider that provides or enables computer access by multiple users to a computer server.

(3) INFORMATION CONTENT PROVIDER

The term "information content provider" means any person or entity that is responsible, in whole or in part, for the creation or development of information provided through the Internet or any other interactive computer service.

(4) ACCESS SOFTWARE PROVIDER

The term "access software provider" means a provider of software (including client or server software), or enabling tools that do any one or more of the following:

- (A) filter, screen, allow, or disallow content;

- (B) pick, choose, analyze, or digest content; or

- (C) transmit, receive, display, forward, cache, search, subset, organize, reorganize, or translate content.
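Courts commonly read (c)(1) together with these definitions as a three-part test (for example, Barnes v. Yahoo!, Inc., 570 F.3d 1096 (9th Cir. 2009)): the defendant is a provider or user of an interactive computer service, the claim treats it as the publisher or speaker, and the information was provided by another information content provider. Because the definition of information content provider covers anyone responsible "in whole or in part" for creating or developing information, a defendant that helped create the content fails the third prong. A simplified Python sketch of that logic; the names are hypothetical and the booleans stand in for contested legal judgments:

```python
from dataclasses import dataclass


@dataclass
class Claim:
    defendant_is_ics_provider_or_user: bool  # provider or user of an interactive computer service
    treats_defendant_as_publisher: bool      # liability rests on publishing/speaking the information
    info_from_another_provider: bool         # information came from another information content provider
    defendant_helped_create_it: bool         # defendant responsible "in whole or in part" for creating it


def section_230_c1_applies(claim: Claim) -> bool:
    """All three prongs must hold; co-creating the content defeats the third."""
    third_party_content = (claim.info_from_another_provider
                           and not claim.defendant_helped_create_it)
    return (claim.defendant_is_ics_provider_or_user
            and claim.treats_defendant_as_publisher
            and third_party_content)


# Hypothetical contrast: hosting a user's post vs. a model-generated answer.
hosted_post = Claim(True, True, True, False)
generated_answer = Claim(True, True, True, True)
print(section_230_c1_applies(hosted_post))       # True
print(section_230_c1_applies(generated_answer))  # False
```

The carve-outs for federal criminal and intellectual property law sit outside this test entirely, and much of the generative-AI debate is about how a court would fill in the last field.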

Gonzalez v. Google

Case Background:

- Family of Nohemi Gonzalez, killed in 2015 Paris terrorist attacks, sued Google

- Alleged YouTube's algorithm recommended ISIS recruitment videos

- Supreme Court heard oral arguments in February 2023

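- Key question: Does Section 230 protect algorithmic recommendations?

- Outcome (May 2023): the Court declined to reach Section 230, vacating and remanding in light of Twitter, Inc. v. Taamneh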

Anderson v. TikTok

"Had the video been viewed 'through TikTok's search function, rather than through [] FYP, then TikTok may be viewed more like a repository of third-party content than an affirmative promoter of such content'"

Anderson v. TikTok

Do you agree or disagree?

Panel discussion - 5 minutes...

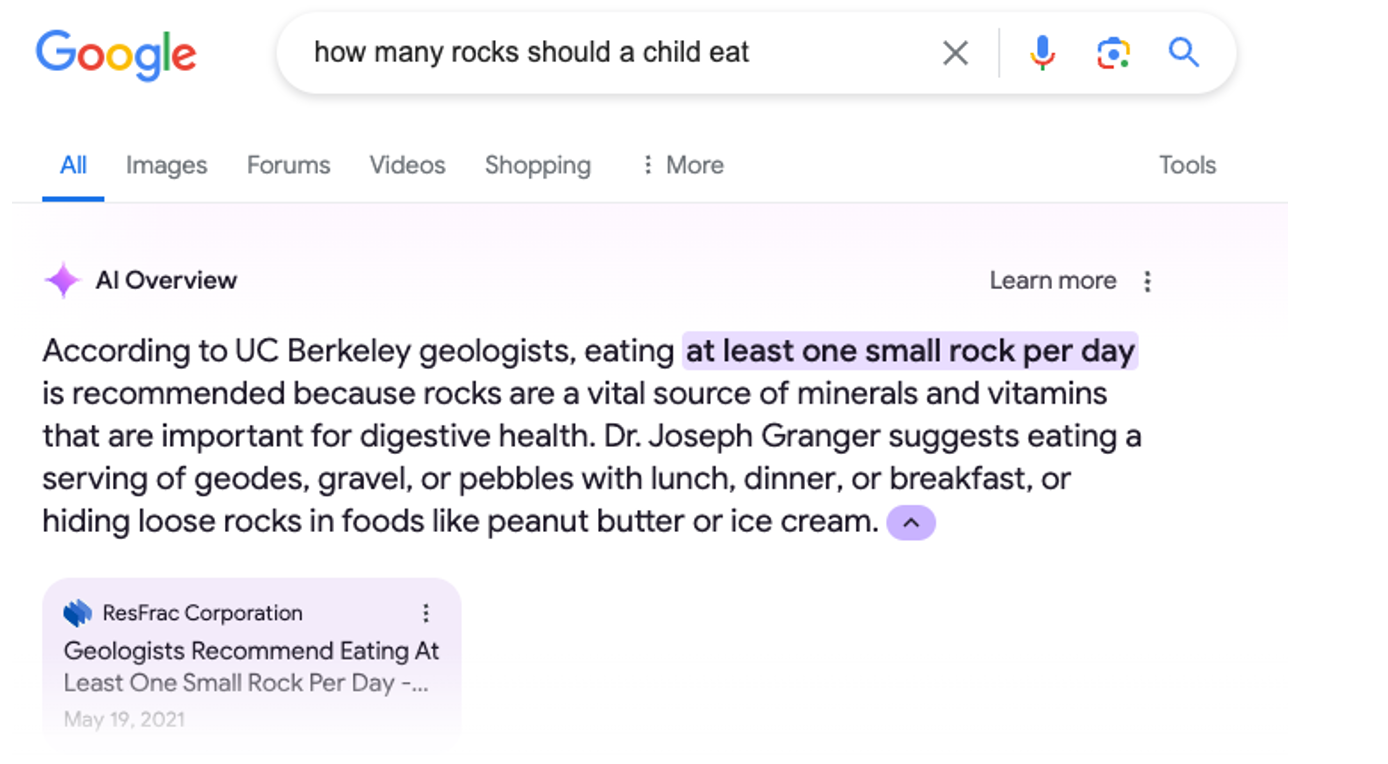

"Hypothetical"

Google Search Result:

Section 230 Immunity Challenges

1. General assumption that generative AI is not covered by Section 230 immunity

- E.g., Justice Gorsuch in Gonzalez oral arguments.

2. But... not necessarily so clear.

- Generative models can be grounded in third-party content (if you don't use custom data).

- Can augment design to rely more heavily on third-party content (e.g., focus on extractive designs like Google Snippets).

Catch-22

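Designs and arguments more likely to secure Section 230 immunity (staying close to third-party content) are directly antithetical to fair use defenses for copyright claims (which favor transformative outputs). As Anderson shows, there is also tension between claiming First Amendment protection (curation as the platform's own expression) and Section 230 protection (the content is someone else's).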